Speeding Up Mandelbrot Set with ARM NEON on Linux

September 10, 2025

Intro

In Part 1 of this series, we explored how to render pixels directly into the Linux framebuffer using ARMv7-A assembly. To demonstrate these techniques, I created a scalar implementation of the Mandelbrot set. The main goal of this article is to see whether we can write vectorized assembly to speed up the computation. I’ll be experimenting on the board with i.MX6DL, which gives us the opportunity to harness ARM’s SIMD engine - NEON. Together, we’ll find out if Neon can really light up 🙄 our solution. Let’s start with a short introduction on what is SIMD and what is NEON. And after that - how it all connects to computing the Mandelbrot set.

SIMD and ARM Neon

In this part we'll go over basic terminology used in this article. If you know this feel free to skip to the next chapter.

SIMD stand for Single instruction, multiple data and describes a computer that performs same operation over multiple pieces of data at the same time. SIMD is closely related to the concept of data level parallelism, where computational load is distributed across different nodes that perform exactly the same operation.

The processors that implement SIMD are ofter called vector processors because the have instruction set designed to efficiently operate on one-dimensional arrays or vectors. Hence the approach to improving code performance via SIMD is called vectorization.

Multimedia loads can very often benefit from SIMD approach. So most modern CPU and MPU designs include SIMD instructions to improve the performance of multimedia use. NXP i.MX6DL as a Cortex-A9 is not an exception and it implements ARM NEON.

ARM NEON is an advanced SIMD architecture extension. NEON registers can considered as vectors of elements of the same data type, with NEON instructions operating on multiple elements simultaneously.

What it means in practice is that our MPU has a set of special 128-bit registers q0...q15 and provides a range of instructions to manipulate them. The way registers are organized is a little more complicated however:

You can see that s0...s31 float register range from Part 1 is mapped into 64-bit wide d0...d15 and 128-bit wide q0...q15. Actually the FPU on this processor is a part of NEON silicon and the fact that s0...s31, d0...d15 and q0...q15 have mapping into each other will come in handy later. Also, besides floating-point, NEON supports multiple data types such as signed and unsigned integers.

Ok, so we're ready to get started. But, if vectorizing our code gives us an improvement in performance, how do we know? So before diving into rewriting our rendering algorithm using NEON let's talk a bit about profiling our code to measure runtime.

Profiling ARM assembly on Linux

The most clinical way to measure execution time of ARM assembly would be to use Performance Monitoring Unit (PMU).

The PMU can be thought of as a set of registers that expose cycle counters and performance events (cache misses, branch misses, stalls), providing access to a very low-level performance information. All the registers can be seen here. However, the PMU registers can only be accessed directly in privileged mode. Without rebuilding the kernel, userspace can access it via perf_event_open() syscall. Even that might be problematic depending on the kernel config and level in /proc/sys/kernel/perf_event_paranoid.

Another approach and the one we're going to take is to simply use clock_gettime() syscall. It measures wall-clock with microsecond resolution (which is more than enough for our application), works entirely in user space and is universally available on Linux. This approach is simpler for measuring our Mandelbrot runtime and more portable.

Here's the full source code implementing skeleton of timing routine (actual code to profile is omitted):

/* -------------------------------------------------------------------

* Using clock_gettime() from ARMv7-A assembly

* ------------------------------------------------------------------*/

.syntax unified

.text

.global _start

_start:

/* Reserve space on stack for 2 timespec structs */

sub sp, sp, #16 @ 2 * sizeof(timespec)

mov r4, sp @ start timespec

add r5, r4, #8 @ end timespec

/* clock_gettime(CLOCK_MONOTONIC, &start) */

mov r7, #263

mov r0, #1

mov r1, r4

svc #0

/*

* Code to profile goes here: |

* <--------------------------/

*/

/* clock_gettime(CLOCK_MONOTONIC, &end) */

mov r7, #263

mov r0, #1

mov r1, r5

svc #0

/* Compute delta_ns */

ldr r0, [r4] @ start sec

ldr r1, [r4, #4] @ start nsec

ldr r2, [r5] @ end sec

ldr r3, [r5, #4] @ end nsec

sub r2, r2, r0

sub r3, r3, r1

/* Convert sec_diff to nanoseconds */

ldr r0, =1000000000

mul r2, r2, r0

add r0, r2, r3 @ r0 = total ns

/* Convert nsec to ASCII string */

ldr r1, =buf+20 @ write digits backward

mov r2, #0

mov r6, #10

itoa_loop:

movw r3, #26215

movt r3, 26214 @ reciprocal, for r0/10

smull r9, r3, r3, r0

asrs r9, r3, #2

asrs r3, r0, #31

subs r4, r9, r3

mls r5, r4, r6, r0 @ r5 = r0 - r4*10

add r5, r5, #'0' @ convert to ASCII

strb r5, [r1, #-1]! @ store to timebuf

mov r0, r4

cmp r0, #0

bne itoa_loop

/* Write the ASCII string */

ldr r2, =buf+20

sub r2, r2, r1 @ length

mov r7, #4 @ write timebuf

mov r0, #1

mov r3, r2

svc #0

ldr r1, =newline

mov r2, #1

mov r7, #4 @ write newline

mov r0, #1

svc #0

mov r7, #1 @ exit(0)

mov r0, #0

svc #0

.data

buf: .space 20

newline:.ascii "\n"

In this approach we store 2 timespec structs on the stack and write start and end time of our execution path into those.

After that we compute the delta and print it with somewhat bastardized version of itoa.

One interesting thing however. When converting to ASCII we need to divide our nanoseconds by 10 to isolate the last digit. As mentioned previously, ARM MPUs generally didn't include a hardware division instruction until ARMv8. I guess ARM had another priorities. Without it, division by constant becomes a slow and verbose loop. Here we can use reciprocal multiplication in place of our division by 10.

Since I'm not a modular arithmetic wizard I can't conjure the right reciprocal out of my head. Luckily, no one has to. By using amazing Compiler Explorer you can just punch in int a = x / 10, pick your target architecture (ARM 32bit) and let the compiler do the heavy lifting. It will show you the actual assembly the compiler generates:

itoa_loop:

movw r3, #26215

movt r3, 26214 @ reciprocal, for r0/10

smull r9, r3, r3, r0

asrs r9, r3, #2

asrs r3, r0, #31

subs r4, r9, r3

This code divides r0 by 10 and stores the result in r4.

At this point we can start vectorizing our algorithm. Armed with this knowledge, we can be sure we know exactly how much faster the new approach is versus the old one.

Vectorized Mandelbrot

So we know how to write a scalar implementation of Mandelbrot set. In order to create a vector implementation we need a change of perspective. Instead of processing a single pixel per loop iteration, we rewrite the Mandelbrot algorithm to take advantage of the full width of the NEON registers.

The key idea is to evaluate four pixels in parallel, loading them into a single vector and advancing them side by side. Rather than computing each pixel independently, we attempt to derive the final color for those simultaneously, achieving performance boost.

The number 4 comes from the fact that this is how many single-precision floats we can fit into a 128-bit NEON register. After we done with the first 4 pixels we can move on to the next 4 and repeat until done. See the Figure 2:

Figure 2. Coordinate vector iteration

Let's dive into the implementation. Let's start with the full code right away and after that comment on interesting NEON parts:

/* -------------------------------------------------------------------

* Mandelbrot set renderer using ARM Neon

* ------------------------------------------------------------------*/

.syntax unified

.text

.global _start

.equ WIDTH_PX, 1280

.equ HEIGHT_PX, 800

.equ MAX_ITER, 200

/* Precomputed reciprocal scale factors */

wrecf: .single 0.00078125 @ 1 / WIDTH_PX

hrecf: .single 0.00125 @ 1 / HEIGHT_PX

/* -------------------------------------------------------------------

* This entry point will need to be passed into linker

* ------------------------------------------------------------------*/

_start:

bl open_fb @ open /dev/fb0, fd in r0 on success

cmp r0, #0

bmi exit1 @ exit(1) if open() failed

mov r6, r0 @ save fb0 fd

/* Reserve space on stack for 2 timespec structs */

sub sp, sp, #16 @ 2 * sizeof(timespec)

mov r10, sp @ start timespec

add r11, r10, #8 @ end timespec

/* clock_gettime(CLOCK_MONOTONIC, &start) */

mov r7, #263

mov r0, #1

mov r1, r10

svc #0

/* Begin computation */

vmov.f32 s0, #-2.0 @ xmin

vmov.f32 s1, #1.0 @ xmax

vmov.f32 s2, #-1.0 @ ymin

vmov.f32 s3, #1.0 @ ymax

vsub.f32 s4, s1, s0

vldr.f32 s5, wrecf

vmul.f32 s4, s4, s5 @ xmul

vsub.f32 s5, s3, s2

vldr.f32 s6, hrecf

vmul.f32 s5, s5, s6 @ ymul

mov r5, #MAX_ITER

vdup.u32 q14, r5 @ MAX_ITER

vmov.f32 q3, #4.0

vmov.f32 q10, #2.0

vmov.u32 q15, #1

mov r5, #0 @ y = 0

y_loop:

cmp r5, HEIGHT_PX

bge write_buf

/* compute cim */

vmov.f32 s6, r5

vcvt.f32.u32 s6, s6

vmul.f32 s6, s6, s5

vadd.f32 s6, s6, s2

vdup.f32 q2, d3[0] @ cim vector

ldr r8, =xinit

vldm r8, {q4}

mov r4, #0 @ x = 0

x_loop:

cmp r4, WIDTH_PX

bge x_end

/* compute cr vector */

vmul.f32 q5, q4, d2[0]

vdup.f32 q6, d0[0]

vadd.f32 q5, q5, q6 @ cr vector

/* set zr, zim = 0 */

veor.f32 q6, q6, q6 @ zr vector

veor.f32 q7, q7, q7 @ zim vector

veor.u32 q11, q11, q11 @ iterations = 0

loop:

vmul.f32 q8, q6, q6 @ zr^2

vmul.f32 q9, q7, q7 @ zim^2

vadd.f32 q12, q8, q9

vclt.f32 q12, q12, q3 @ check zr^2 + zim^2 < 4

vclt.u32 q13, q11, q14 @ check iterations < MAX_ITER

vand q12, q13, q12 @ mask of active lanes

vorr d26, d24, d25

vpmax.u32 d26, d26, d26

vmov r9, d26[0] @ extract masked lanes

cmp r9, #0

beq loop_done @ continue if any lanes remain

vmul.f32 q7, q6, q7

vmul.f32 q7, q7, q10

vadd.f32 q7, q7, q2 @ update zim

vsub.f32 q6, q8, q9

vadd.f32 q6, q6, q5 @ update zr

vand q12, q15, q12

vadd.u32 q11, q11, q12 @ increment iterations by mask

b loop

loop_done:

vshr.u32 q12, q11, #6

vadd.u32 q12, q12, q15

vmov.u32 q13, #5

vmul.u32 q12, q13, q12

vshl.u32 q11, q11, q12 @ iterations vector

vmovn.u32 d24, q11

bl set_pixvec @ store iter vector to memory

vadd.f32 q4, q4, q3 @ update x vector

add r4, r4, #4

b x_loop

x_end:

add r5, r5, #1

b y_loop

/* -------------------------------------------------------------------

* Write final buffer to framebuffer with pwrite64(fd, buf, count, pos)

* ------------------------------------------------------------------*/

write_buf:

/* clock_gettime(CLOCK_MONOTONIC, &end) */

mov r7, #263

mov r0, #1

mov r1, r11

svc #0

mov r0, r6

ldr r1, =buf

mov r2, WIDTH_PX * HEIGHT_PX * 2

mov r3, #0

mov r4, #0

mov r5, #0

mov r7, #0xB5 @ pwrite64()

svc #0

print_time:

/* Compute delta */

ldr r0, [r10] @ start sec

ldr r1, [r10, #4] @ start nsec

ldr r2, [r11] @ end sec

ldr r3, [r11, #4] @ end nsec

sub r2, r2, r0 @ sec_diff

sub r3, r3, r1 @ nsec_diff

ldr r0, =1000000000

mul r2, r2, r0

add r0, r2, r3 @ r0 = total ns

/* Convert nsec to ASCII string */

ldr r1, =timebuf+20

mov r2, #0

mov r6, #10

itoa_loop:

movw r3, #26215

movt r3, 26214 @ reciprocal, for r0/10

smull r9, r3, r3, r0

asrs r9, r3, #2

asrs r3, r0, #31

subs r4, r9, r3

mls r5, r4, r6, r0 @ r5 = r0 - r4*10

add r5, r5, #'0' @ convert to ASCII

strb r5, [r1, #-1]! @ store to timebuf

mov r0, r4

cmp r0, #0

bne itoa_loop

/* Write the ASCII string */

ldr r2, =timebuf+20

sub r2, r2, r1 @ length

mov r7, #4 @ write timebuf

mov r0, #1

mov r3, r2

svc #0

ldr r1, =newline

mov r2, #1

mov r7, #4 @ write newline

mov r0, #1

svc #0

b exit0

/* -------------------------------------------------------------------

* store pixel vector [xN, xN+1, xN+2, xN+3] to memory

* ------------------------------------------------------------------*/

set_pixvec:

push {r4-r6}

ldr r0, =buf

mov r6, WIDTH_PX

mla r5, r5, r6, r4

lsl r4, r5, #1

add r0, r0, r4

vst1.16 {d24}, [r0] @ store neon 64-bit vector as 16-bit shorts

pop {r4-r6}

bx lr

open_fb:

ldr r0, =fbdev

mov r1, #2 @ O_RDWR

mov r7, #5 @ open()

svc #0

bx lr

close_fb:

mov r7, #6 @ close()

svc #0

bx lr

exit1:

bl close_fb

mov r0, #1

mov r7, #1 @ exit(1)

svc #0

exit0:

bl close_fb

mov r0, #0

mov r7, #1 @ exit(0)

svc #0

.data

.align 16

xinit: .single 0.0, 1.0, 2.0, 3.0 @ important to align this to 128-bit

buf: .space WIDTH_PX * HEIGHT_PX * 2

timebuf: .space 20

newline: .ascii "\n"

fbdev: .asciz "/dev/fb0"

.end

Let's go over this listing. From Figure 2 above, it's clear that besides using a r register for tracking x coordinate we also need a vector of 4 elements to do the computation.

See how vector is aligned at 16 byte boundary (widtht of q4) and initialized with the first 4 pixels. Data alignment is very important for SIMD instructions:

ldr r8, =xinit

vldm r8, {q4}

mov r4, #0 @ x = 0

.data

.align 16

xinit: .single 0.0, 1.0, 2.0, 3.0 @ important to align this to 128-bit

Initializing a NEON vector to the same value in all lanes can be done by via 'vdup' which duplicates a scalar register into all lanes:

vdup.u32 q14, r5 @ MAX_ITER

There's a faster way to set it to all 0s - by xoring a vector with itself which also doesn't need extra setup:

veor.f32 q6, q6, q6 @ zr vector

veor.f32 q7, q7, q7 @ zim vector

veor.u32 q11, q11, q11 @ iterations = 0

To illustrate how SIMD operations work in general let's pick the following line:

vadd.f32 q12, q8, q9

Here's the basic illustration what's going on in when we add q8 and q9 together:

Figure 3. Adding two NEON vectors

When debugging vectorized code, it’s often required to inspect the contents of SIMD registers directly. GDB can help with that. Imagine we are looking inside the main loop:

(gdb) l 89,90

89 vclt.f32 q12, q12, q3 @ check zr^2 + zim^2 < 4

90 vclt.u32 q13, q11, q14 @ check iterations < MAX_ITER

With the following print commands in GDB we can see the contents of register q12 that tracks our |Z| and compare it with q3:

(gdb) p (float[4])$q12

$10 = {4.01904202, 4.00289488, 3.98682976, 3.97084403}

(gdb) p (float[4])$q3

$11 = {4, 4, 4, 4}

See how first two points already escaped, but the last 2 are still iterating. Remember this detail.

We can also look at the register as a whole:

(gdb) i r q12

q12 {u8 = {0xfe, 0x9b, 0x80, 0x40, 0xb7, 0x17, 0x80, 0x40, 0x38, 0x28, 0x7f, 0x40, 0x4f, 0x22, 0x7e, 0x40},

u16 = {0x9bfe, 0x4080, 0x17b7, 0x4080, 0x2838, 0x407f, 0x224f, 0x407e},

u32 = {0x40809bfe, 0x408017b7, 0x407f2838, 0x407e224f},

u64 = {0x408017b740809bfe, 0x407e224f407f2838}, f32 = {0x4, 0x4, 0x3, 0x3}, f64 = {0x202, 0x1e2}}

From the previous article we know that important part of the computation is checking the following condition:

while (z_real * z_real + z_imag * z_imag < 4 && iterations < max_iterations).

There's no conditional branch on vector comparison in NEON. We need to check every lane and somehow compress the result of comparison into a scalar register, upon contents of which we can branch to exit the loop. Here's one way to do it:

vclt.f32 q12, q12, q3 @ check zr^2 + zim^2 < 4

vclt.u32 q13, q11, q14 @ check iterations < MAX_ITER

vand q12, q13, q12 @ mask of active lanes

vorr d26, d24, d25

vpmax.u32 d26, d26, d26

vmov r9, d26[0] @ extract masked lanes

cmp r9, #0

beq loop_done @ continue if any lanes remain

We use vclt - "vector compare less than", vand and vorr - bitwise AND and OR. Then utilize the fact that q and d registers map into each other to extract and compress the comparison result into r9. Here's the diagram that illustrates what's going on:

With the details laid out, the next step is to measure what we're after from the start - performance.

Performance comparison

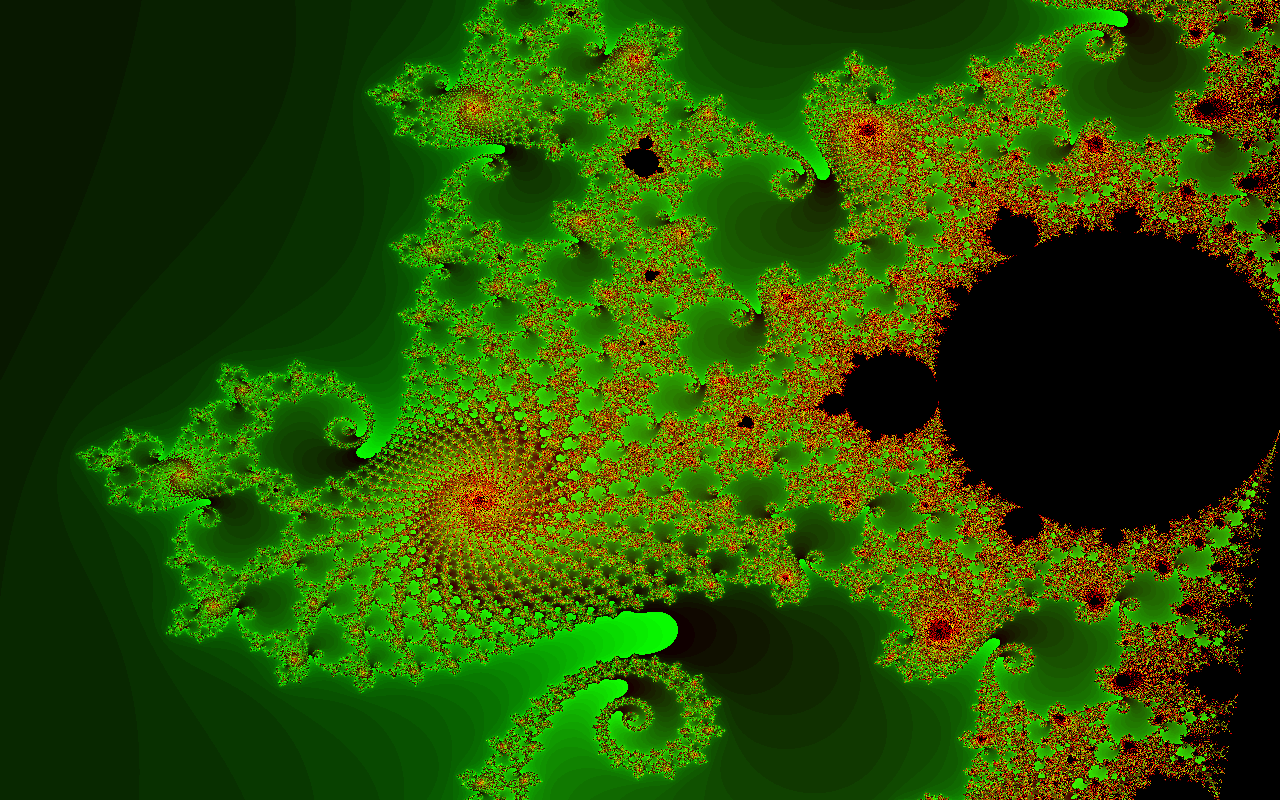

Before we start comparing the numbers, let’s take a moment to appreciate a zoomed-in section of the Mandelbrot set for its aesthetic qualities.

What is it they think they’ll see,

In the realm of the noble Cabiri?

They’re gods! But wondrously strange,

Always causing their forms to change,

Never knowing what they might be.

-Goethe, Faust II

Figure 5. A region of Mandelbrot set rendered with NEON (from device framebuffer)

Finally, lets check how much performance we managed to squeeze by porting into NEON. Here's the runtime comparison while rendering the same region of complex plane, with 200 iterations limit for both algorithms:

| Avg. scalar time, s | Avg. vectorized time, s | Speedup |

|---|---|---|

| 1.91818 | 0.921042 | 2.08 |

A bit slower than expected... Let's spend a second and see what's going on here. Remember that since the scalar approach computed single float at a time and our SIMD architecture allows to compute 4x 32-bit lanes at a time, the theoretical speedup ceiling for NEON is 4x. We can see that in practice Mandelbrot never quite hits that ceiling.

That’s because the iteration count is different for most adjacent pixels. Some points escape quickly, while others require many more steps. This forces the vector lanes out of sync, leaving some waiting while others are still computing, thus decreasing the lane utilization. Than there's extra overhead - checking if point is escaped in the inner loop is more expensive in the SIMD approach. Overall, the result is a respectable gain nevertheless.

Conclusion

In this second part we pushed the Linux framebuffer further by using ARM NEON to accelerate the rendering of the Mandelbrot set. We explored the limits of SIMD algorithms when dealing with mathematical workloads and touched upon time profiling in Linux.

With this, the series comes to an end. We started with the basics of drawing to /dev/fb0, looked at some assembly tricks and finally covered ARM SIMD extensions and how they fit into the picture. For me personally, framebuffer was one of the first Linux interfaces I learned about and it remains dear to my heart.

Whether you were here to look at the Linux framebuffer internals, dig deeper into ARM assembly, or just see a cool fractal, I hope this series gave you something practical or maybe even inspiring. Source code for this series can be found at my Github.